What AI Is For

These days we are constantly bombarded with news of how AI is going to eliminate millions of jobs, be our therapists and cure cancer. Heck, it will even create a whole new science! I find myself asking: what is AI for? Surely there is no way it can do all these things, right?

I have plenty more questions about AI. Let me start with the two that have puzzled me the longest. The first is: how is AI different from the software and other technologies we’ve had before? I ask this as someone who has worked in software engineering and in both software and hardware support. Even so, I have checked more than once whether I really know what artificial intelligence is. Here are a couple of definitions: Artificial intelligence (AI) refers to the ability of computers and machines to mimic human problem-solving and decision-making capabilities. AI does this by taking in vast amounts of data, processing it, and learning from its past in order to streamline and improve in the future. A normal computer program, by contrast, would need human intervention to fix bugs and improve processes. I have read these and other definitions over and over again for years now, and I just can’t for the life of me see how AI is distinct from other software, such as operating systems like macOS or applications like Excel. Hasn’t Excel been solving mathematical and accounting problems that would normally be done by humans for decades? Google Docs has been providing editing suggestions for years. Granted, now it can actually (re)write passages for you. But isn’t that simply an improvement on its editing capability?

“I recently worked with a group from industry, offering a detailed explanation of a technical AI method. After some time, the lead technical member of the group—who had no previous exposure to AI—exclaimed, ‘But that’s not intelligence! All you’re doing is writing a complete program to solve the problem.’ Well folks, I’m sorry–but that’s all there is. There is no magic in AI. All we do is tackle areas and tasks that people previously were unable to write computer programs to handle. Because we have developed sets of tools and methodologies throughout the years to accomplish this… But there is no universal set of magic ideas.”

When I read this excerpt from a recent post by Rodney Brooks, artificial intelligence and robotics scientist and former director of MIT’s AI laboratory, I knew I wasn’t missing anything about AI.

As for AI programs not needing human intervention to fix bugs and improve processes, what do you call the gazillion developers who work at OpenAI, xAI, Meta, etc.? To be sure, I put the question to AI itself: “Who fixes bugs in ChatGPT?”

Grok says: “Bugs in ChatGPT, developed by OpenAI, are primarily identified, triaged, and fixed by OpenAI’s internal engineering and software development teams. These teams handle everything from server-side issues and model performance glitches to user interface problems, often using a combination of automated testing, user reports, and iterative deployment processes.”

ChatGPT says: “The people who fix bugs in ChatGPT are mainly the engineers and researchers at OpenAI…but they also rely on external security researchers and user reports.”

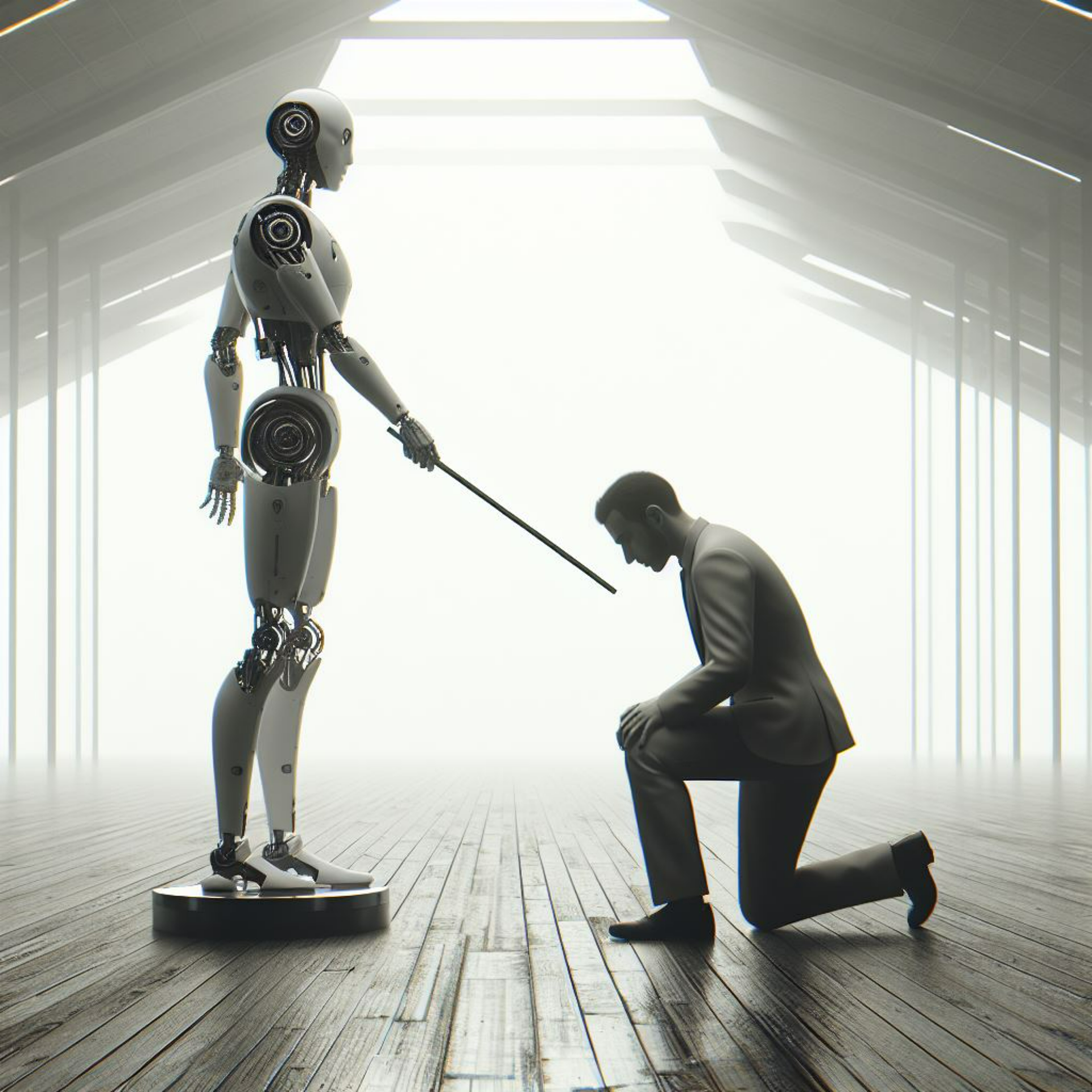

This brings me to the second question that has long puzzled me: why is AI referred to as if it were an autonomous entity? We don’t think of a plane, a tractor or the telephone as independent beings, yet these are technologies that have radically transformed our lives. AI has yet to prove its touted potential, yet it is extolled as a messiah. Perhaps this is because AI is a software technology (as opposed to hardware like the ones just mentioned) that can process huge amounts of information and perform complex computations. But then, how can software that eats and breathes a shit ton of human-created data and follows human instructions be self-determining? Not even the humans who created AI are autonomous entities: we exist because our parents created us, and we grow and are sustained by food and mental stimuli produced by other humans.

Human Replacement

Even if it were possible for AI to be autonomous, why would we want to create such a system? It could be because we wish to create an advanced version of ourselves. Max Tegmark calls this version Life 3.0. In his book Life 3.0: Being Human in the Age of Artificial Intelligence, he describes three stages of life. He defines life in terms of hardware and software: our hardware is the body, built according to our DNA, and our software is our behaviour and instincts. Life 1.0 can design neither its hardware nor its software; both are determined by DNA and change only through evolution. Bacteria are an example of this. Life 2.0 has evolved hardware but can largely design its own software through learning. Humans fit into this category. Our software is the knowledge we acquire by learning to read, write, build bridges, cure illnesses and so on. Through learning we have also managed, to a limited extent, to change parts of our hardware: for example, we can perform heart transplants and breast enlargements. But ultimately our human hardware has evolved very little in thousands of years, and we are still limited by our lifespans and by disease. Life 3.0, however, can overcome the limitations of Life 2.0 because it can design both its hardware and its software. It is the master of its own destiny. An example of this would be AI, particularly the branch of artificial general intelligence (AGI) with beyond-human capabilities.

Some argue that we need AI to release us from the toil of life so we can have more leisure time. Bill Gates asserts that within the next decade AI will replace humans for most tasks, which would enable us to work only two days a week, unless you work in certain professions such as nursing or baseball. Apparently AI won’t be able to give you your medication or draw blood, nor do people want to watch machines play baseball.

Another reason we might want AI to replace humans is that human workers are expensive. Labour, on average, accounts for about 70% of business costs. Imagine a world where you can have labour on tap 24/7, with no toilet or lunch breaks, no holidays or sick days and no health insurance benefits. Just picture the profit margins in such a world! Well, many companies have started to imagine this world. Amazon announced 14,000 job cuts, citing AI as the main cause. Salesforce recently eliminated 4,000 positions as a result of “the benefits and efficiencies” of AI. Their optimism appears to be premature, however. Some of the companies that have carried out AI-driven layoffs have had to rehire humans. In 2024 Klarna announced that its AI assistant was doing the work of 700 customer service staff, but about a year later it started hiring humans again because it discovered that real people are actually better at dealing with real people! Even OpenAI has admitted that the best AI models are no match for human coders and are unable to solve the vast majority of coding tasks. And a study by the non-profit organisation Model Evaluation and Threat Research (METR) found that experienced programmers using AI coding assistants were actually slower: they took 19% longer to complete their tasks, in part because they had to review and correct the AI’s output.

I find it fascinating that there are those who believe we can create a better or more advanced version of us humans. I totally get it. I too would love to see a better us: less violent, more aware, less greedy, kinder, less lazy, more hardworking… Nevertheless, I doubt it can come from us. After all, a foundational principle of computer science is “garbage in, garbage out”, meaning that the quality of the output depends on the quality of the input. AI can only reflect the input we give it. For example, LLMs hallucinate because we humans, their creators, also hallucinate. Equally, AI can create beautiful images because we can too.

Human Creation

Regardless of whether we achieve the goal of creating an advanced human replacement, there are two things I’m certain of. I don’t say this because I have a crystal ball; I say it based on historical evidence. First, AI will not eliminate most jobs or take over most tasks, as Bill Gates has predicted it will. I believe that, like any major technology, AI will transform what we do. Take the arrival of tractors, harvesters and other mechanical farming equipment. Before they were invented in the 19th century, about 60% of the population worked in agriculture; today it is less than 10%. The farming jobs the machines eliminated were replaced by new jobs elsewhere, in factories and shops, for example. Another example is spreadsheet apps such as Lotus 1-2-3 and Excel. When the spreadsheet was first introduced in the early 1980s, it was predicted that it would eliminate bookkeeping jobs. Yes, it did. However, it also increased the number of accountants. According to a study by Morgan Stanley, the number of people working as bookkeepers and accounting/auditing clerks in the US fell from ~2 million in 1987 to just above 1.5 million by 2000. Over the same period, the number of Americans employed as accountants/auditors rose from ~1.3 million to ~1.5 million, and the number of management analysts and financial managers grew from ~0.6 million to ~1.5 million. This is because spreadsheets made far more complex accounting tasks possible, and those tasks required more skilled workers.

Another word for complex is messy. Which brings me to the second thing I’m sure of: humans will still be needed to clean up the mess we, hmmm, AI will generate. This has been true of many technologies, however beneficial they are. Take the large-scale generation and distribution of electricity. It completely transformed our lives by enabling comforts such as light bulbs, refrigerators and cars, and industries like telecommunications, computing and aviation. However, it has also had a detrimental impact on our environment and health. Most electricity is still generated from fossil fuels: about 60% of the world’s electricity in 2024. Burning fossil fuels is the biggest source of greenhouse gas emissions and a major source of air pollution, both of which harm our environment and our health (air pollution is estimated to cause ~7 million deaths per year). We’ve been trying to replace fossil fuel electricity generation with cleaner alternatives like solar, wind and nuclear for decades. Even though these are cleaner as far as emissions go, they come with a different type of mess. Nuclear energy produces toxic waste that is hard to dispose of. And solar panels have a recyclability problem: currently only about 10% are recycled, and the rest end up in landfills because the way the panels are built makes them difficult and costly to recycle. So in all of the above examples, a whole bunch of humans are working to clean up the mess generated by the technologies we invented. Which brings me to the next thing I find bewildering about AI: why does it require so much electricity?

An MIT Technology Review report shows that from 2005 to 2017 the amount of electricity needed to run data centres remained flat thanks to increases in efficiency, despite the construction of armies of new data centres to serve the rise of cloud-based online services, from Facebook to Netflix. The introduction of energy-intensive AI hardware into US data centres from 2017 onwards pushed their share of national electricity consumption from 1.9% in 2018 to 4.4% in 2023. Their share is forecast to rise to between 6.7% and 12% by 2028, depending on the efficiency gains achieved. However, the Technology Review report asserts that these predictions do not reflect the full picture. The most energy-intensive part of AI isn’t training the models but inference (the process of answering users’ queries), which is estimated to account for about 80–90% of AI computing power. To put this into perspective, training OpenAI’s GPT-4 consumed 50 gigawatt-hours of energy, enough to power San Francisco for three days, and training is the less energy-hungry part. How much energy a query to this or any other model consumes depends on several factors, such as the type of device used, the time of day, the energy grid and the location of the data centre. Tech companies don’t make this information available because they treat it as a trade secret. What is clear is that an enormous amount of electricity is needed, which is why tech companies like Amazon, Meta and Microsoft are firing up nuclear power plants. But it will take years before any energy materializes from these plants. In the meantime, these data centres have to rely on more carbon-intensive sources of electricity, such as gas and coal. As it is, the carbon intensity of electricity used by data centres is 48% higher than the US average.

This is what confuses me: why create AI systems that need so much electricity (AI is guzzling up other resources too, like water, but we’ll save that for another time) when that electricity will most likely come from fossil fuels? The problem of intermittency makes renewables such as wind and solar unsuitable, since data centres need to operate 24/7 without interruption. And we have known about the impact of burning fossil fuels on the environment for over a century. It could be argued that the field of AI is new and needs time to become more efficient and to reduce its enormous appetite for resources. But AI research is generally said to have officially begun in 1956, so there has been plenty of time, and plenty of information available, to inform more efficient system designs. The emergence of DeepSeek’s R1 model earlier this year shows that, with intent, a cheaper and more efficient AI model is possible. R1 was reportedly built for about $6 million, versus a reported $100 million for OpenAI’s GPT-4, and it uses 8-bit precision for many of its calculations instead of the higher-precision formats that are standard, reducing the computational power and energy needed.

Don’t get me wrong, I’m not anti-AI. I use it every day. I appreciate its language translation capability. It is way faster than I am at coming up with the right Portuguese verb conjugations (there are so many). It is also better than I am at spotting typos and grammar errors. It has made the Google and DuckDuckGo search engines more powerful. What I object to is making AI out to be more than it is. It is a tool like the other tools we’ve invented before, and it will have benefits, drawbacks and limitations. I also find the claim that it will do most of what humans can do, and do it better, highly suspect. What do we think will happen to billions of idle humans while AI is busy working away? Just look at what a group of idle teenagers gets up to, and multiply that by millions. We can’t all become baseball players or spend all our time working out in the gym. Actually, this is an area I for one would love AI to tackle. Why can’t someone develop an AI that does the things I dislike doing, like working out, while I still get the physical and mental benefits? Or an AI system that will unload the dishwasher and washing machine for me? Why can’t it solve big problems like income inequality, or reduce greenhouse gas emissions instead of adding to them? The fact is that we don’t know exactly what this tool called AI will do, as it is still evolving. But we can decide what it will do. We can make it work for us rather than replace us. And if we continue to treat it as its own entity, we will miss this opportunity. As the computer scientist Jaron Lanier puts it:

When you treat the technology as its own beneficiary, you miss a lot of opportunities to make it better. I see this in AI all the time. I see people saying, “Well, if we did this, it would pass the Turing test better, and if we did that, it would seem more like it was an independent mind.”…

One example is that we’ve deliberately designed large-model AI to obscure the original human sources of the data that the AI is trained on to help create this illusion of the new entity. But when we do that, we make it harder to do quality control. We make it harder to do authentication and to detect malicious uses of the model because we can’t tell what the intent is, what data it’s drawing upon. We’re sort of willfully making ourselves blind in a way that we probably don’t really need to. (From the Gray Area Podcast)